Gen AI-Powered Synthetic Data Generation in Robotics

Innovation in robotics and AI can face significant obstacles: high costs, time-intensive data collection, and safety risks. This is largely due to traditional methods of acquiring real-world data, which often limit progress, delay advancements, and inflate expenses.

SoftServe is integrating NVIDIA Omniverse, a platform of application programming interfaces, software development kits, and services that enable developers to easily integrate Universal Scene Description (OpenUSD) and NVIDIA RTX rendering technologies into existing software tools and simulation workflows for building AI systems.

Omniverse Replicator, a core component of the Omniverse platform, allows us to build custom synthetic data generation (SDG) pipelines for training perception AI models, and NVIDIA Isaac Sim, a reference robotic application built on Omniverse, lets us simulate and validate robots in physically accurate environments. Combined with Generative AI and multimodal data capabilities, these technologies reduce reliance on costly physical data collection and testing.

By combining NVIDIA technologies with SoftServe’s expertise in a simulation-first approach, organizations can train, test, and validate robots faster, more safely, and more cost-effectively.

Read our white paper to learn more about how SDG and simulation-first strategies can give your organization a competitive advantage.

The power of synthetic data generation in robotics

SDG helps address some of the most significant challenges in AI training and testing. It’s a scalable, efficient alternative to traditional data collection, which often involves high costs, time-consuming processes, and privacy concerns. Generated data mirrors real-world conditions without relying on sensitive or personal information, which helps support compliance with privacy regulations like GDPR and HIPAA.

SDG extends beyond the visual spectrum to include LIDAR, radar, acoustic signals, and range sensors, creating highly realistic training scenarios for robots operating in complex environments. It also enables the testing of rare and edge-case scenarios that are nearly impossible to capture in real life.

Key applications of SDG in robotics

SDG is transforming robotics by reducing data acquisition costs and accelerating AI development. Gartner predicts that by 2030, synthetic data will entirely surpass real data in AI models, as SDG's versatility provides diverse, high-fidelity datasets for a wide range of applications. This shift results in an average ROI boost of 5.9% on AI projects, with top performers reaching 13% ROI, and saves robotics validation engineers up to 46% of their time on testing and validation. Robotics perception engineers, who typically spend at least 35% of project time collecting and cleaning data, also benefit from significantly reduced data preparation time. Beyond the time saved, this significantly cuts storage, processing, and compliance expenses across industries.

| Application | Description |

|---|---|

| Manufacturing | Simulating rust detection systems with synthetic data to validate AI models under varying conditions. |

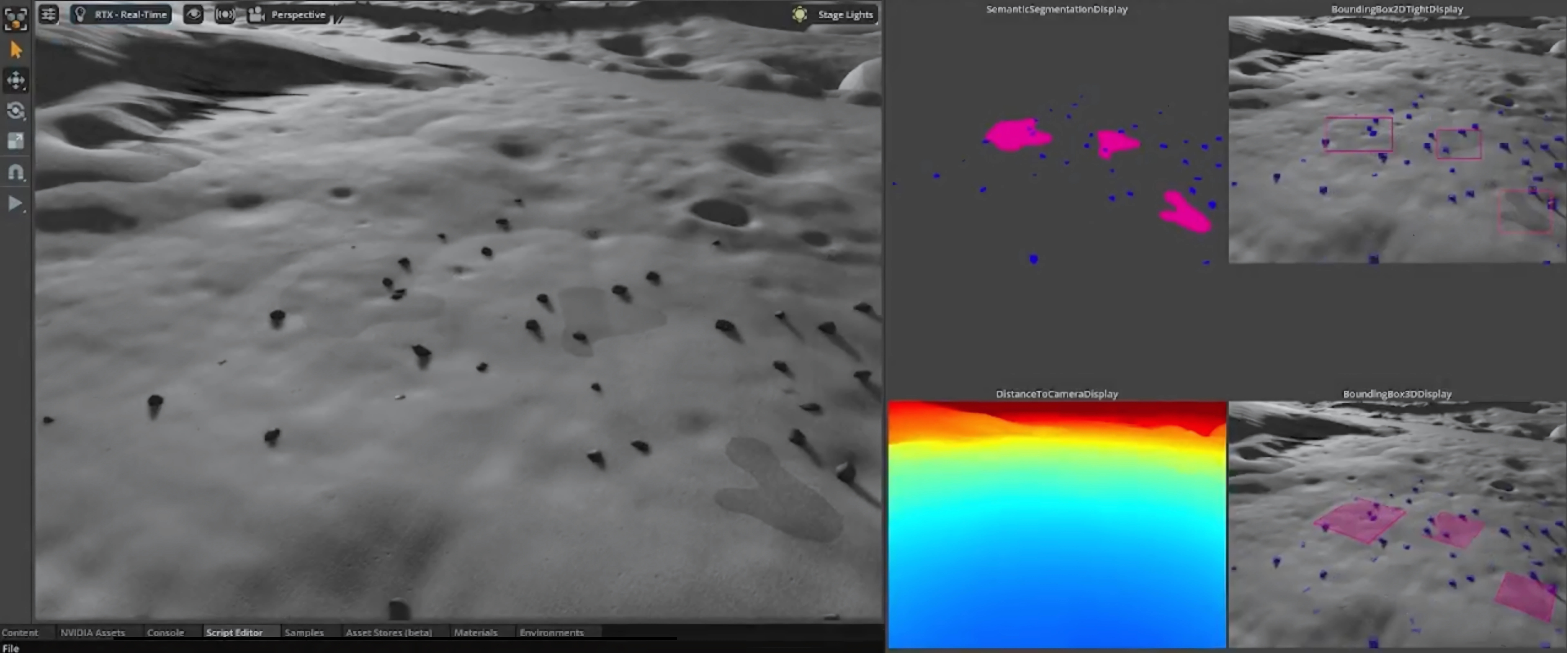

| Space Exploration | Testing lunar drones in simulated moon environments with SDG-created datasets for navigation and detection. |

| Industrial Automation | Optimizing robotic assembly-line movements using SDG to test object manipulation under diverse scenarios. |

| Energy, Oil & Gas | Simulations replicate various operational conditions for robots and boost predictive maintenance and inspection. |

| Automotive | Simulate diverse scenarios for self-driving cars, including challenging weather conditions, varying lighting, and complex road environments. |

| Healthcare | SDG generates synthetic datasets for tasks like anomaly detection in diagnostic imaging and maintains patient privacy while accelerating model development. |

| Agriculture | Train models to optimize crop management, delivering precision and efficiency in controlled environments. |

| Construction and Mining | SDG optimizes robotic operations for site inspections and material handling. |

Augment real-world training data with SDG

Collecting real-world data for training machine learning (ML) models is time-consuming and costly. While real-world data is the gold standard for testing, it often needs supplementation, particularly for rare or edge-case scenarios.

SDG enriches datasets with synthetic examples, eliminating the need for extensive manual collection and labeling. This enhances model robustness by introducing controlled variations in lighting, object positioning, and environments.

Domain randomization and high-fidelity simulations ensure synthetic data closely mimics real-world conditions. SDG creates comprehensive datasets, reduces model bias, and improves generalization while covering scenarios that are difficult to replicate physically.
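To make the idea of domain randomization concrete, here is a minimal sketch in plain Python. It does not use Omniverse Replicator; the parameter ranges, texture names, and the `SceneParams` structure are all hypothetical and only illustrate how lighting, object position, and surface appearance can be varied per sample in a reproducible way.

```python
import random
from dataclasses import dataclass

# Hypothetical texture names, chosen only for illustration.
TEXTURES = ("brushed_metal", "rusted_steel", "painted_plastic")


@dataclass(frozen=True)
class SceneParams:
    light_intensity: float  # arbitrary units, assumed range 200 to 1500
    object_xy: tuple        # object position on an assumed 1 m x 1 m table
    texture: str            # one of TEXTURES


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene configuration."""
    return SceneParams(
        light_intensity=rng.uniform(200.0, 1500.0),
        object_xy=(rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0)),
        texture=rng.choice(TEXTURES),
    )


def sample_scenes(count: int, seed: int = 0) -> list:
    """Draw `count` configurations; a fixed seed keeps the dataset reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(count)]


if __name__ == "__main__":
    for params in sample_scenes(3):
        print(params)
```

In a real pipeline, each sampled configuration would drive a renderer or simulator that produces the image and its labels. Seeding the generator is a deliberate choice here, since it lets a dataset be regenerated exactly when a model needs to be retrained or audited.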

SDG for perception AI model training without real-world data

Training models exclusively on real-world data can create bottlenecks in the production pipeline, often delaying the ML development cycle. Effectively replacing real-world data hinges on the fidelity of the synthetic data, which must accurately replicate the physical, visual, and functional characteristics of the component being modeled.

Advanced tools like NVIDIA Omniverse Replicator and NVIDIA Warp enable the generation of synthetic data across the visual and non-visual spectrum, such as photorealistic images with labels, LIDAR readings, and force/torque data, and can simulate materials and objects such as cloth, moon soil, stones, and animals. This helps ensure the data is both diverse and realistic.
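As a simplified illustration of non-visual synthetic data, the sketch below generates a 2D LIDAR scan in plain Python. It is not how Omniverse Replicator or NVIDIA Warp produce sensor data; the single circular obstacle, the Gaussian noise model, and every parameter value are assumptions made for the example.

```python
import math
import random


def simulate_scan(center, radius, num_beams=360, max_range=10.0,
                  noise_std=0.01, seed=0):
    """Return ranges for a 2D LIDAR at the origin facing one circular obstacle.

    Assumes the sensor sits outside the obstacle. Beams that miss, or whose
    hit lies beyond max_range, report max_range.
    """
    rng = random.Random(seed)
    cx, cy = center
    ranges = []
    for i in range(num_beams):
        angle = 2.0 * math.pi * i / num_beams
        dx, dy = math.cos(angle), math.sin(angle)
        # Solve |t * d - c|^2 = r^2 for the nearest intersection distance t.
        b = dx * cx + dy * cy
        disc = b * b - (cx * cx + cy * cy - radius * radius)
        distance = max_range
        if disc >= 0.0:
            t = b - math.sqrt(disc)
            if 0.0 < t < max_range:
                # Gaussian range noise is applied to hits only (an assumption).
                distance = t + rng.gauss(0.0, noise_std)
        # Keep every reading inside the sensor's valid interval.
        ranges.append(min(max(distance, 0.0), max_range))
    return ranges


if __name__ == "__main__":
    scan = simulate_scan(center=(2.0, 0.0), radius=0.5, num_beams=8)
    print([round(r, 3) for r in scan])
```

Because the obstacle geometry is known, each reading comes with its ground truth for free, which is the property that makes synthetic sensor data attractive for training and validation.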

SDG accelerates AI development cycles, reduces costs, and meets privacy and compliance requirements. It also provides scalability, enabling the generation of large datasets without physical constraints or resource limitations.

SDG for complex objects

Conventional SDG pipelines are proficient at simulating rigid objects but face limitations when addressing complex interactions between soft and rigid objects, which involve intricate dynamics, deformations, and material properties.

SoftServe's SDG methodologies, enhanced by technologies like NVIDIA Warp, MATLAB, and ANSYS, overcome these challenges by enabling high-fidelity modeling of flexible objects and their interactions with rigid surfaces. These tools simulate nuanced physical behaviors such as elasticity, friction, and structural deformation, ensuring datasets accurately reflect real-world scenarios.

Dynamic object modeling, supported by physics-based simulations in MATLAB and ANSYS, is essential for tasks demanding precision, such as robotic manipulation of soft materials. This approach enables AI models to learn complex behaviors, adapt to dynamic environments, and achieve a higher level of realism and performance.
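For a sense of what physics-based modeling of elasticity involves at the smallest scale, the following sketch integrates a single damped spring in plain Python. It is a toy stand-in, not the Warp, MATLAB, or ANSYS workflows described above; the stiffness, damping, mass, and force values are illustrative assumptions.

```python
def settle_displacement(stiffness, damping, mass, force,
                        dt=0.001, steps=5000):
    """Integrate a 1D damped spring under a constant load.

    Uses semi-implicit (symplectic) Euler: velocity is updated first, then
    position is advanced with the new velocity. Returns the final displacement.
    """
    x, v = 0.0, 0.0
    for _ in range(steps):
        # Hooke's law restoring force plus linear viscous damping.
        acceleration = (force - stiffness * x - damping * v) / mass
        v += acceleration * dt
        x += v * dt
    return x


if __name__ == "__main__":
    # Assumed values: k = 100 N/m, c = 2 N*s/m, m = 0.1 kg, F = 1 N.
    final_x = settle_displacement(stiffness=100.0, damping=2.0,
                                  mass=0.1, force=1.0)
    print(f"settled displacement: {final_x:.6f} m")
    print(f"static prediction F/k: {1.0 / 100.0:.6f} m")
```

Once the oscillation has damped out, the displacement should match the static prediction F/k, which gives a quick sanity check on the integrator. Soft-body simulators extend this same idea to many coupled elements with contact and friction.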

Case study: Pfeifer & Langen vertical farming project

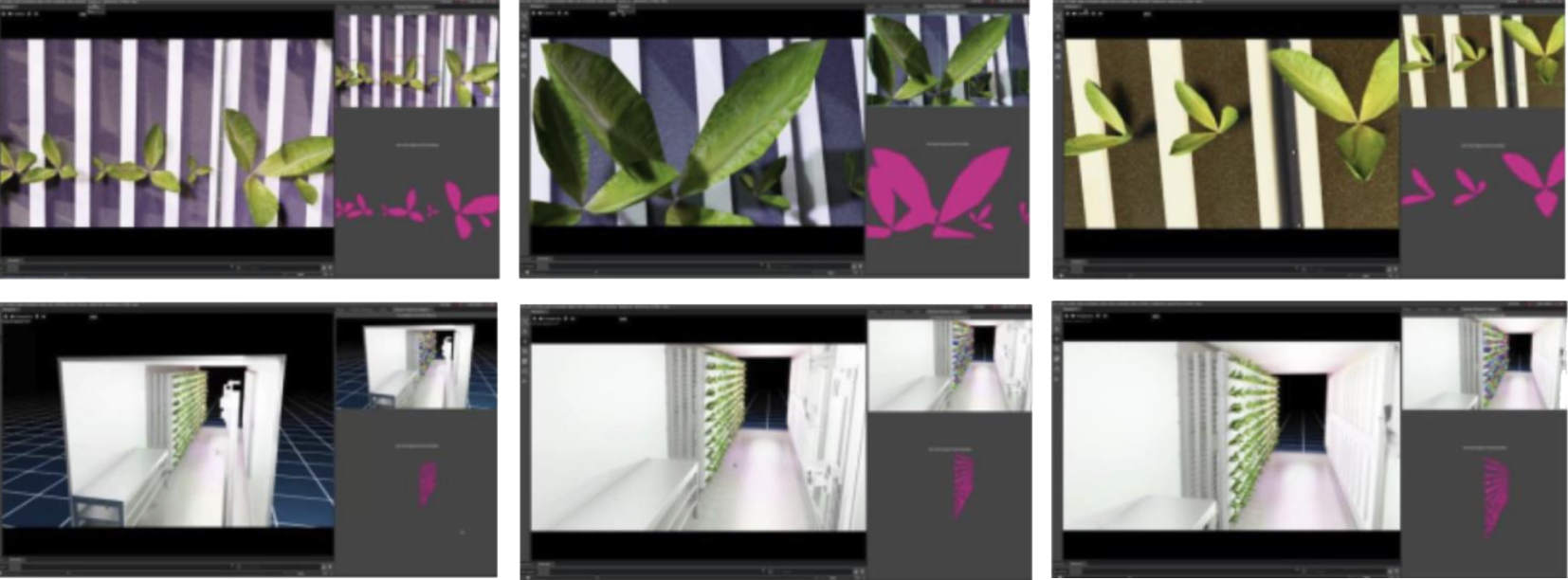

Pfeifer & Langen (P&L), a leading European food producer, aimed to automate manual tasks within vertical farming containers to make them economically attractive. Using NVIDIA Omniverse Replicator, the SoftServe team generated synthetic datasets simulating various agricultural environments, including plant species, growth stages, and lighting conditions. These datasets trained AI models for plant recognition and environmental monitoring.

Isaac Sim was then employed to simulate robotic operations within the containers. The outcomes included reduced operational costs and time-to-market, improved quality and performance of robotic vision models, and continuous, accurate plant data collection that helps optimize vertical farming operations.

By integrating NVIDIA Omniverse Replicator and Isaac Sim, P&L automated its vertical farming operations, showcasing the transformative potential of these advanced technologies in developing and validating robotic systems.

Robotic simulations for validation and testing

Robotic simulations complement SDG by creating environments where synthetic datasets can be used to train, test, and refine AI models under realistic conditions. For instance, synthetic sensor data such as LIDAR or force/torque readings can be integrated into virtual simulations to emulate real-world interactions, enhancing model robustness and readiness.

Virtual commissioning (VC) leverages digital twins to simulate mechanical, electrical, and control components in a virtual setting, validating system behavior early in development. Combined with SDG, this process enables iterative refinement of AI models and robotic systems without the need for costly physical prototypes.

Software-in-the-loop (SIL) and hardware-in-the-loop (HIL) testing extend these capabilities. SIL uses synthetic data to validate software performance in isolation, while HIL incorporates real hardware interacting with synthetic environments to test system integration. Together, these methods help ensure that both hardware and software are optimized for real-world scenarios.
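The SIL idea can be sketched in a few lines of Python: a piece of control software is exercised against synthetic sensor readings with no hardware involved. The `speed_command` controller, its thresholds, and the test cases below are hypothetical and exist only to show the pattern.

```python
def speed_command(ranges, stop_distance=0.5, cruise_speed=1.0):
    """Hypothetical controller under test: stop if any reading is too close."""
    if not ranges:
        return 0.0  # fail safe when no sensor data arrives
    return 0.0 if min(ranges) < stop_distance else cruise_speed


# Synthetic range readings (meters) paired with the expected speed command.
SYNTHETIC_CASES = [
    ("clear_path", [5.0, 4.8, 5.2], 1.0),
    ("obstacle_ahead", [5.0, 0.3, 5.2], 0.0),
    ("sensor_dropout", [], 0.0),
]


def run_sil_cases(controller, cases):
    """Run the controller on each synthetic case and collect any mismatches."""
    failures = []
    for name, ranges, expected in cases:
        actual = controller(ranges)
        if actual != expected:
            failures.append((name, expected, actual))
    return failures


if __name__ == "__main__":
    failures = run_sil_cases(speed_command, SYNTHETIC_CASES)
    print("all cases passed" if not failures else f"failures: {failures}")
```

In an HIL setup, the same cases would instead be fed to the physical controller through its real interfaces, so that timing and integration issues surface as well.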

Cloud-based simulations further enhance the role of SDG by enabling scalable, concurrent testing across diverse conditions. This allows for faster iteration and optimization, reducing development costs while maintaining high safety and performance standards.
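Concurrent testing across conditions can be approximated locally with a worker pool, as in this sketch. A thread pool stands in for cloud workers here, and the braking-distance check, the 1 m limit, and the speed and friction values are assumptions chosen for illustration rather than part of any specific platform.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

G = 9.81  # gravitational acceleration in m/s^2


def braking_distance(speed, friction):
    """Distance to stop from `speed` (m/s) on a surface with the given friction."""
    return speed ** 2 / (2.0 * friction * G)


def evaluate(scenario, limit=1.0):
    """Evaluate one scenario: does the robot stop within `limit` meters?"""
    speed, friction = scenario
    distance = braking_distance(speed, friction)
    return {"speed": speed, "friction": friction, "passed": distance <= limit}


def run_all(speeds, frictions, workers=4):
    """Evaluate every speed and friction combination using a worker pool."""
    scenarios = list(product(speeds, frictions))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the scenarios.
        return list(pool.map(evaluate, scenarios))


if __name__ == "__main__":
    for result in run_all(speeds=[1.0, 3.0], frictions=[0.2, 0.8]):
        print(result)
```

Each scenario is independent, which is what makes this kind of workload easy to spread across many machines. In a cloud deployment, the pool would typically be replaced by a job queue or batch service, and each evaluation would launch a full simulation instead of a closed-form check.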

Boost synthetic data generation for physical AI with Gen AI

Integrating digital twins with SDG in a cloud environment provides a scalable robotic testing and development solution. Digital twins enable precise simulations of real-world systems, supporting multi-team collaboration and parallel testing. This approach accelerates development cycles by validating multiple scenarios simultaneously, reducing time and costs. AWS Cloud-based solutions are particularly effective in applications where diverse datasets and conditions can be tested efficiently to optimize performance and workflows.

NVIDIA Isaac Sim offers realistic, physics-based simulations that enable detailed validation and testing of robotic systems in virtual environments, closely mimicking real-world conditions. The USD Search NVIDIA NIM microservice and NVIDIA Omniverse Replicator enhance SDG pipelines by automating asset retrieval and dataset generation. USD Search employs AI-driven methods to efficiently locate 3D assets, while NVIDIA Omniverse Replicator randomizes attributes like object positioning and textures. Combined with Generative AI, these tools help ensure datasets reflect various scenarios and conditions.

Gen AI amplifies these benefits by automating key steps in SDG workflows. It introduces procedural variation in object attributes such as size, material, and lighting, creating diverse, high-fidelity datasets while minimizing manual effort.

This integration supports dynamic asset management and adaptive scene customization, which is crucial for industrial robotics applications. By combining cloud infrastructure, Gen AI, and advanced SDG tools, these workflows deliver efficient, scalable, and versatile solutions for modern AI and robotics.

Conclusion

SDG and simulation-first methodologies are changing AI and robotics forever, enabling faster, safer, and more cost-effective development. By leveraging advanced tools like NVIDIA Omniverse Replicator, Isaac Sim, the USD Search NIM microservice, and AWS cloud-based digital twins, organizations can generate diverse, high-fidelity datasets and optimize workflows for real-world applications. These technologies accelerate innovation and offer scalability and robustness, helping drive progress across industries.

SoftServe stands at the forefront of this transformation, delivering cutting-edge solutions that combine SDG expertise with deep technical knowledge.

Learn more about how SDG and a simulation-first approach can give your organization a competitive advantage. Access our demo page.