Benchmarking Stereo-Matching Algorithms for Space Use Cases on Radiation-Hardened AMD APU Edge Computing Device

Introduction

New space missions to the surface of the moon, Mars, and asteroids are increasingly reliant on advanced autonomous systems to meet their growing demands. This trend was highlighted in 2023 when autonomous systems and robotics were identified as key components of NASA’s Moon to Mars architecture. Autonomous systems have a wide range of applications, from exploring the permanently shadowed regions on the moon to assisting astronauts and navigating distant celestial bodies such as asteroids, Mars, Venus, and Saturn’s moon Titan.

To ensure safe and effective autonomous traversals of mobile robots, implementing fast and robust perception methods is essential, as these methods enable the spacecraft to reason about the surrounding environment. One of the challenges with integrating perception algorithms is that they are computationally intensive. For that reason, they often must be implemented on dedicated field-programmable gate arrays (FPGAs) and must undergo optimizations to fit into the tight requirements of space-grade hardware. This not only increases development time but also introduces additional integration risks and adds complexity to the system.

In this article, we examine how the rad-hardened SEP Space Edge Processor by Blue Marble Communications Inc., equipped with the “dacreo” software stack of BruhnBruhn Innovation AB (BBI), can be used to run sophisticated perception pipelines without the need for specialized hardware. Our evaluation involves benchmarking various classical and AI-based stereo-matching algorithms. We measure the processing time of each method on a representative development device and assess algorithm quality by calculating accuracy on two datasets: the first is the analog POLAR Stereo Dataset, and the second is a photorealistic dataset generated in a custom environment based on NVIDIA Isaac Sim. Both datasets aim to simulate the conditions of the lunar south pole.

Our tests showed that the Space Edge Processor’s AMD APU architecture can use GPU acceleration to run a local stereo block matching algorithm in less than 30 ms (resolution 864x640). Moreover, we found that it can run Fast-ACVNet+, a deep-learning-based stereo algorithm, in around one second. These results may eliminate the need for a dedicated FPGA implementation in some scenarios. Finally, a comparison of the quality of the created stereo maps showed that deep stereo methods provide a promising alternative to classical methods for certain applications.

Before reading this article, consider checking our white paper to learn more about the Space Edge Processor.

The importance of stereo matching

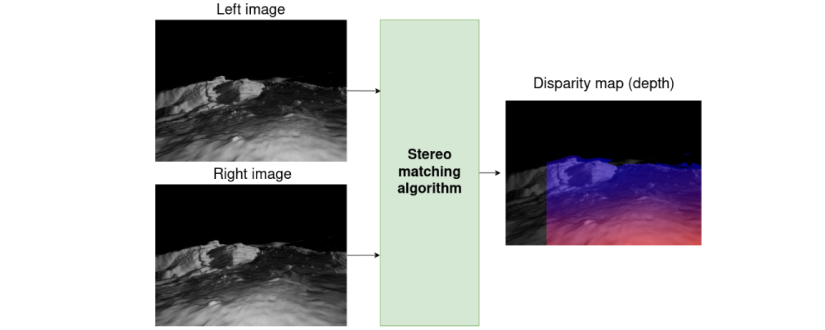

The goal of stereo matching is to create a map that estimates the distance between a robot and objects in its environment. It works by determining the horizontal shift (disparity) between corresponding pixels in the left and right camera images.

A simplified view of the role of stereo matching algorithms. A produced disparity map can be used to create a depth map of the environment.
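For a rectified stereo pair, the standard pinhole relation between disparity and depth is Z = f · B / d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The sketch below illustrates this conversion. The focal length and baseline values are hypothetical and are not taken from the cameras used in this study.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero (or invalid) disparity: the point is at infinity or unmatched.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 1000 px focal length, 0.2 m baseline.
for d in (100.0, 20.0, 10.0):
    print(f"disparity {d:5.1f} px -> depth {disparity_to_depth(d, 1000.0, 0.2):.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, so a fixed disparity error translates into a larger depth error at long range.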

Stereo-matching algorithms have already been used in the autonomous navigation systems of planetary rovers, for instance in JPL’s Mars rovers Spirit, Opportunity, Curiosity, and Perseverance. They have also been used to aid the teleoperation of lunar vehicles, as demonstrated by China’s Yutu rovers, and will be used in the upcoming NASA VIPER lunar rover mission. Another example use case for stereo matching is mapping changes in the lunar surface after interaction with the spacecraft’s engine plume. This experiment was planned with the SCALPSS payload, installed onboard the IM-1 lunar lander.

The methods currently used in the space industry are classical ones. Such methods (e.g., the block-matching algorithm) are well-studied, deterministic, and relatively efficient. They often rely on multiple pre-processing and post-processing steps to obtain an accurate disparity map. However, they may perform poorly on texture-less surfaces and may be sensitive to specular reflections. In fact, such conditions hindered the computer vision algorithms of the Ingenuity Mars helicopter, which experienced difficulties navigating over a visually featureless dune field in its final flights.

Recent advancements in machine learning and artificial intelligence have led to a new group of methods called deep-stereo algorithms. These algorithms outperform classical methods in stereo-matching benchmarks such as KITTI 2015. They can help to achieve invariance to image conditions such as lighting and noise, which can be useful for low-light lunar polar environments. They can also extract meaningful and relevant features from images, requiring less transformation engineering and parameter tuning. These approaches are not yet used in space missions due to their computational complexity and a certain level of non-determinism. However, they may find applications in the future, as they allow for the generation of denser stereo maps using fewer images.

In general, stereo-matching algorithms are computationally intensive. Therefore, they can be used to benchmark how radiation-hardened compute solutions, such as Blue Marble’s Space Edge Processor, may help increase the capabilities and robustness of autonomous navigation algorithms for space use cases.

Benchmark methodology

The benchmark aims to evaluate the performance of the AMD 7nm Zen 2/Vega APU devices found on the Blue Marble Space Edge Processor and the suitability of various stereo algorithms for space applications. To achieve this, we selected several stereo-matching methods of varying computational complexity. Each algorithm underwent three tests:

- Measurement of processing time on a reference system using AMD 7nm APU.

- Evaluation of algorithm quality using POLAR Stereo Dataset — an analog dataset generated in the laboratory, aiming to recreate the conditions at the poles of the moon.

- Evaluation of the algorithm quality using a synthetic image dataset created in a photorealistic simulation, imitating the visual conditions of the lunar south pole.

The algorithms considered in the study are summarized in the table below.

| Algorithm Name | Short Description | Links |
|---|---|---|
| Local Block Matching (LBM) | Local Block Matching (LBM) is a classical, widely adopted, and fast stereo-matching algorithm. This method estimates the depth map by finding matches between blocks of pixels in the left and right camera images. | Docs |
| Semi-Global Block Matching (SGBM) | Semi-Global Block Matching (SGBM) is a classical method that introduces an additional cost function to LBM, that penalizes discontinuities in created maps. It is slower than LBM but can create more accurate depth representations. | Paper, Code |
| Cascade LBM | Cascade LBM executes the LBM algorithm on multiple image resolutions (1, 1/2, 1/4, 1/8) and merges the result into a single depth map. | Docs |
| Cascade SGBM | In this method, SGBM is run on multiple image resolutions (1, 1/2, 1/4, 1/8) and results are merged into a single depth map. | Code |
| Hitnet | Hitnet employs deep learning-based feature descriptors and confidence estimators to produce stereo maps. The algorithm pulls out relevant information from images using convolutional neural networks and evaluates tile hypotheses on multiple resolutions to yield accurate depth values. | Paper, Code |
| Fast-ACVNet+ | This is a deep stereo method that employs novel attention concatenation volume. The method uses intensive operations (such as 3D convolutions) but employs multiple techniques (e.g., fine-to-important sampling) to enable real-time performance. | Paper, Code |
| IGEV | This deep stereo method leverages 3D convolutions to encode geometry and contextual information via combined geometry encoding volume. It iteratively refines the estimated depth value with recurrent ConvGRU modules to accurately estimate stereo maps. | Paper, Code |
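To make the idea behind local block matching concrete, here is a minimal brute-force sketch in pure Python. It is not the GPU-accelerated implementation that was benchmarked. It assumes rectified grayscale images stored as lists of rows and uses the sum of absolute differences (SAD) as the matching cost. The function names, block size, and search range are illustrative choices.

```python
def sad_cost(left, right, y, x, d, block):
    """Sum of absolute differences between a left block and the right block shifted by d."""
    cost = 0
    for dy in range(-block, block + 1):
        for dx in range(-block, block + 1):
            cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return cost


def disparity_map(left, right, block=1, max_disp=4):
    """Brute-force local block matching on rectified grayscale images (lists of rows)."""
    h, w = len(left), len(left[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(block, h - block):
        for x in range(block, w - block):
            best_d, best_cost = 0, float("inf")
            for d in range(max_disp + 1):
                if x - d - block < 0:  # shifted block would leave the right image
                    break
                cost = sad_cost(left, right, y, x, d, block)
                if cost < best_cost:
                    best_d, best_cost = d, cost
            disp[y][x] = best_d
    return disp


# Toy pair: the right image is the left image shifted by 2 pixels.
W, H, SHIFT = 12, 5, 2
left = [[x * x + 3 * y for x in range(W)] for y in range(H)]
right = [[left[y][x + SHIFT] if x + SHIFT < W else 0 for x in range(W)] for y in range(H)]
disp = disparity_map(left, right)
print(disp[2][3:11])
```

On this toy pair the search recovers the 2-pixel shift for pixels far enough from the left border. Pixels closer to the border cannot be searched at the true disparity, which is one reason real implementations mark such regions as invalid.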

The code for each method has been adapted to operate on the 7nm AMD APU devices found on the Blue Marble Space Edge Processor (SEP) and to use GPU acceleration. The deep learning algorithms were executed as-is, using publicly available checkpoints without fine-tuning. The maximum disparity for each algorithm differed but was always higher than 255. The neural networks were JIT-compiled into optimized kernels to speed up inference. Output from each algorithm was post-processed with a simple background filter to remove matches on completely dark surfaces. Additionally, for classical algorithms, CLAHE (contrast-limited adaptive histogram equalization) and Sobel transformations were applied as a pre-processing step.
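The background filter can be pictured as a per-pixel intensity test on the input image. The sketch below is one plausible form of such a filter and is not the exact filter used in the study. The `INVALID` marker, the function name, and the intensity threshold are assumptions made for illustration.

```python
INVALID = -1.0  # hypothetical marker for "no disparity at this pixel"


def filter_dark_background(disparity, image, min_intensity=5):
    """Invalidate disparities wherever the source image is (almost) completely dark."""
    return [
        [d if pix >= min_intensity else INVALID for d, pix in zip(d_row, i_row)]
        for d_row, i_row in zip(disparity, image)
    ]


image = [[0, 2, 120], [200, 0, 90]]                   # grayscale intensities, 0..255
disparity = [[12.0, 13.0, 14.0], [15.0, 16.0, 17.0]]  # raw algorithm output
print(filter_dark_background(disparity, image))
```

A fixed threshold is the simplest option. It removes matches in deep shadow and black sky but cannot distinguish a dark surface from a dark background.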

The benchmark was performed on a test unit equipped with an AMD Ryzen 7 4800U APU (almost identical to the AMD V2748 device found on the SEP), 32 GB of DDR4 memory, and a BBI-tailored ROCm 5.7.1 software stack. Each algorithm processed a test image pair (864x640) 10 times, and the average processing time was recorded.
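The timing procedure can be sketched as a small harness that calls an algorithm repeatedly and averages the wall-clock time. This is a minimal sketch, not the harness used in the study. The function name is hypothetical, and the article does not state whether warm-up runs were excluded, so none are excluded here.

```python
import time


def average_runtime_ms(fn, *args, runs=10):
    """Call fn(*args) `runs` times and return the mean wall-clock time in milliseconds.

    For GPU code, fn should block until the result is ready (for example by
    copying the output back to host memory); otherwise only the kernel launch
    is measured.
    """
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        timings.append((time.perf_counter() - start) * 1000.0)
    return sum(timings) / len(timings)


# Stand-in workload; a real benchmark would pass the stereo-matching call instead.
print(f"{average_runtime_ms(sum, range(100_000)):.3f} ms")
```

With JIT-compiled networks the first call typically includes compilation time, so it is worth deciding explicitly whether that call should count toward the average.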

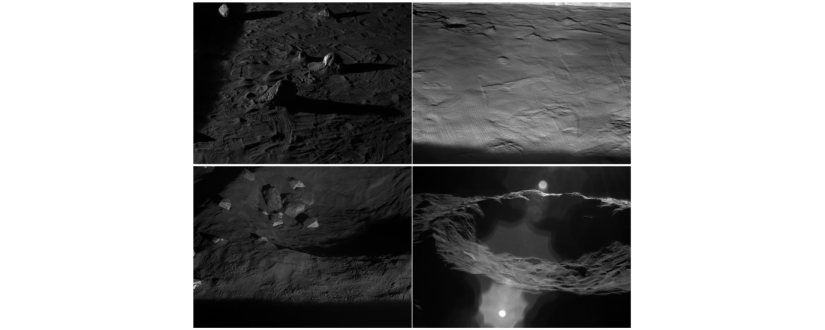

The suitability of algorithms for space applications was assessed using Polar Optical Lunar Analog Reconstruction (POLAR) Stereo Dataset published by researchers from NASA Ames Research Center. In our study, stereo pairs from terrains 1, 6, 11, and 12 were picked as they represent diverse conditions present on the surface of the moon. Exposure times varied from 64 to 512 milliseconds. For each image, a crop around the center of size 864x640 was taken and included in the final dataset.
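The center crop described above can be written in a few lines. This sketch assumes images stored as lists of rows. The function name is illustrative, and the same crop would have to be applied to both images of a stereo pair and to the ground-truth map.

```python
def center_crop(image, out_w=864, out_h=640):
    """Return the out_w x out_h window centred in the image (a list of rows)."""
    h, w = len(image), len(image[0])
    if w < out_w or h < out_h:
        raise ValueError("image is smaller than the requested crop")
    top = (h - out_h) // 2
    left = (w - out_w) // 2
    return [row[left:left + out_w] for row in image[top:top + out_h]]


# Small demonstration: crop a 6x4 image to its central 2x2 window.
demo = [[10 * y + x for x in range(6)] for y in range(4)]
print(center_crop(demo, out_w=2, out_h=2))
```

Cropping identically in both images keeps the pair rectified and leaves disparity values unchanged, since both images are shifted by the same horizontal offset.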

Sample images from POLAR Stereo Dataset

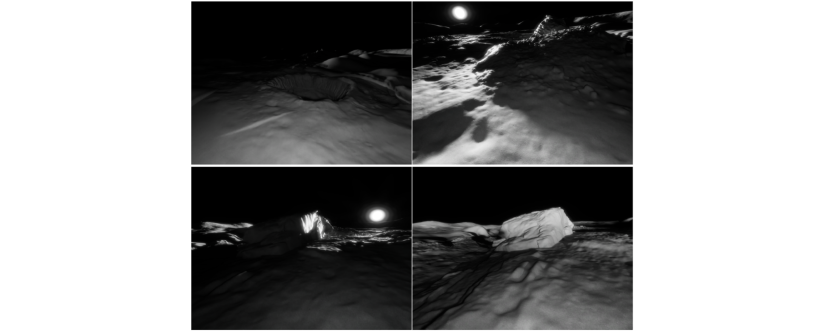

Algorithms were also evaluated using a photorealistic, synthetic lunar dataset created in NVIDIA Isaac Sim. This dataset includes over 40 image pairs in 864x640 resolution, simulating lunar south pole conditions with varying lighting, material, and rendering properties. The synthetic dataset focuses on evaluation over larger distances.

Sample images from the synthetically generated dataset

The quality of algorithms was assessed using metrics including the detected pixel percentage, the average pixel error, and the misclassified pixel percentage. Each metric was calculated separately for the POLAR Stereo Dataset and the synthetic dataset. In the results, the misclassified pixel percentage is denoted as “Bad N%.” For instance, we define “Bad 2%” as the percentage of pixels where the relative difference between true and predicted disparity is higher than 2%, which roughly corresponds to tolerating a 20 cm error at a distance of 10 m. Note that for the synthetic dataset, sky pixels that are classified as having finite depth are excluded from the “Bad N%” metrics. Such occurrences are quantified in a separate metric, the percentage of false positives.
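The 20 cm figure follows from depth being inversely proportional to disparity: to first order, a 2% relative disparity error gives a 2% relative depth error, and 2% of 10 m is 0.2 m. The sketch below shows one way the metrics could be computed. The choice of denominators is an assumption, since the article does not spell it out: here “Bad N%” and the average error are taken over detected pixels, and false positives over sky pixels. `None` marks a missing prediction or a sky pixel without ground truth.

```python
def stereo_metrics(pred, truth, bad_threshold=0.02):
    """Compute quality metrics from flat lists of predicted and true disparities."""
    valid = detected = bad = sky = false_pos = 0
    abs_err_sum = 0.0
    for p, t in zip(pred, truth):
        if t is None:              # sky pixel: no finite depth exists
            sky += 1
            if p is not None:      # the algorithm hallucinated a depth
                false_pos += 1
            continue
        valid += 1
        if p is None:              # no match found for this pixel
            continue
        detected += 1
        err = abs(p - t)
        abs_err_sum += err
        if err / t > bad_threshold:
            bad += 1

    def pct(a, b):
        return 100.0 * a / b if b else 0.0

    return {
        "detected_pct": pct(detected, valid),
        "avg_pixel_error": abs_err_sum / detected if detected else 0.0,
        "bad_pct": pct(bad, detected),
        "false_positive_pct": pct(false_pos, sky),
    }


pred = [10.0, 10.5, None, 5.0, None]
truth = [10.0, 10.0, 10.0, None, None]
print(stereo_metrics(pred, truth))
```

In this toy example two of the three ground-truth pixels are detected, one of them exceeds the 2% tolerance, and one of the two sky pixels is a false positive.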

Results

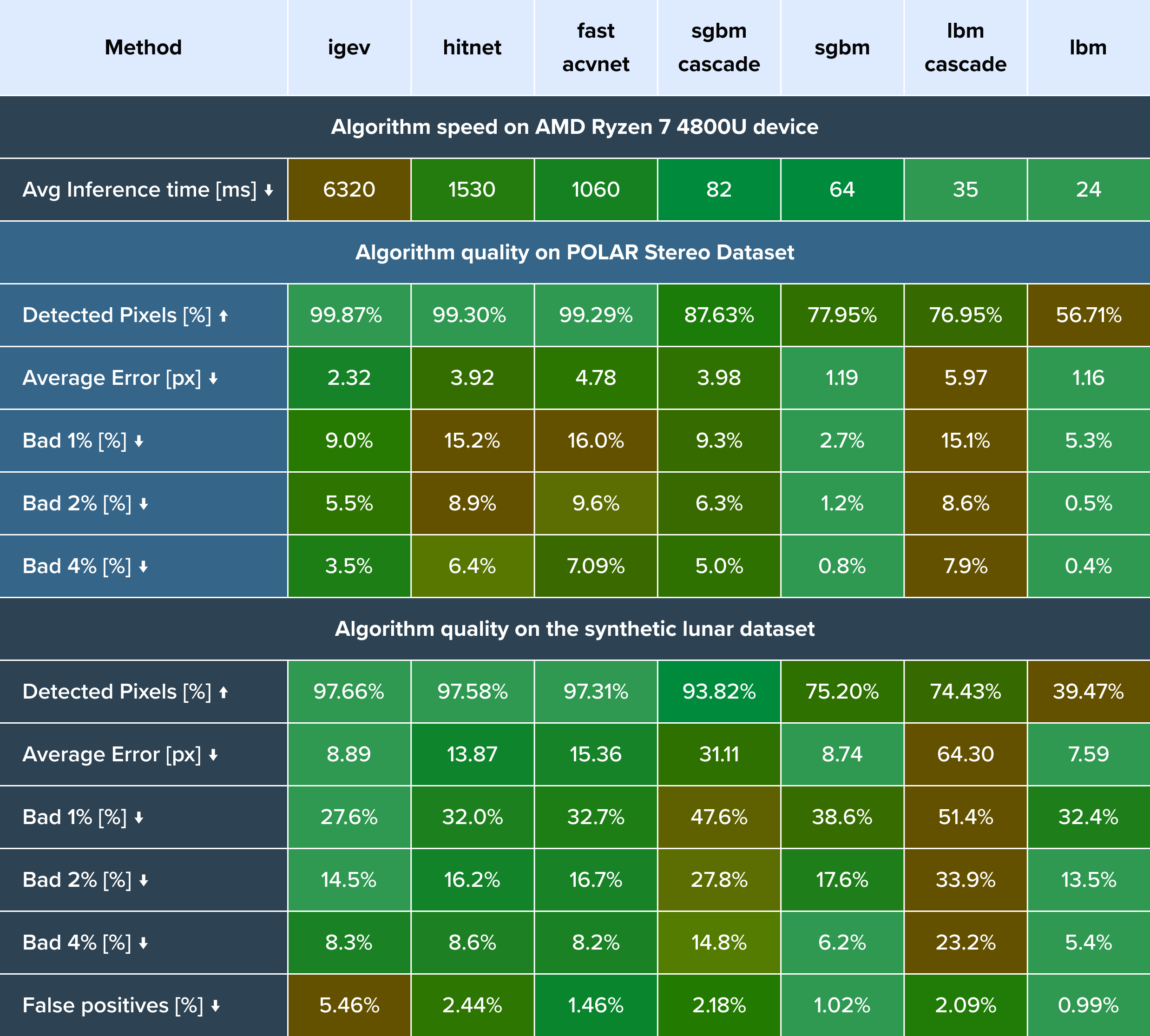

The resulting processing times and metrics capturing the quality of different algorithms are summarized in the table below.

The table with results for three experiments. ↑ means higher values are better, while ↓ indicates that lower values are better.

The table reports the average inference time, the average results on the POLAR Stereo Dataset, and the average results on the synthetic lunar dataset generated in NVIDIA Isaac Sim. All methods were successfully implemented and executed on the test unit. The classical methods demonstrated real-time performance on solutions like the Space Edge Processor, while deep learning methods were shown to be suitable for scenarios with higher latency tolerance. All algorithms run on the device's integrated GPU, eliminating the need for heavy CPU usage or external vision-processing boards and leaving CPU resources available for interrupt-critical tasks.

The inference times obtained for deep stereo algorithms make them applicable to certain space-edge computing use cases. It was, however, observed that several operations used by these algorithms create unanticipated bottlenecks in processing time on the test device. For example, disabling dilated convolutions in the Hitnet method lowered the inference time from 1530 ms to 900 ms. This could be attributed to the GPU’s memory constraints or inefficiencies in the adapted version of the AMD ROCm stack. It was also observed that 3D convolutions take disproportionately longer than on a PC-grade GPU (NVIDIA RTX), slowing down the IGEV and Fast-ACVNet+ algorithms. A possible reason is that, according to AMD’s release notes, support for 3D convolutions was added in ROCm 6.0.0, whereas the test unit ran ROCm 5.7.1. Nevertheless, the results obtained for deep algorithms can be satisfactory for certain applications.

The results on both datasets indicate that the most computationally demanding methods yield the highest number of matching pixels, leading to dense stereo maps. Furthermore, deep learning methods can generate more accurate depth maps on lunar-like datasets than classical cascade methods. While non-cascade LBM and SGBM can accurately estimate depth, they typically detect fewer pixels, compromising the overall algorithm quality. This could be due to difficulties in detecting texture-less regions on lunar surface images present in the dataset. Achieving better results with classical methods is probably possible but would require longer fine-tuning and parameter search.

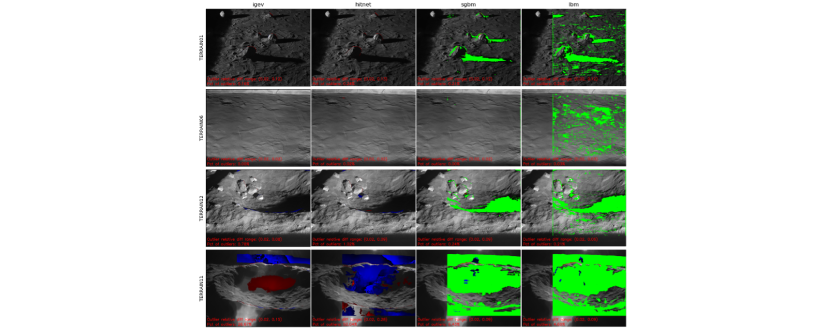

For the POLAR Stereo Dataset, it was observed that deep stereo algorithms usually surpass classical approaches for images with no direct sunlight pointing toward the camera. For images with sunlight, these algorithms tend to generate more outliers than classical methods, but they provide much denser stereo maps from a single image pair. Additional thresholding techniques and a careful choice of exposure time could help filter out matches in visually poor regions and reduce the number of incorrect matches. Sample results for the POLAR Stereo Dataset are in the figure below.

Stereo mapping results for 4 chosen algorithms, on 4 chosen images from POLAR Stereo Dataset. Green pixels show where depth couldn’t be determined. The red pixels show where the predicted depth was lower than the actual depth. Blue pixels show where the predicted depth was higher than the actual depth.

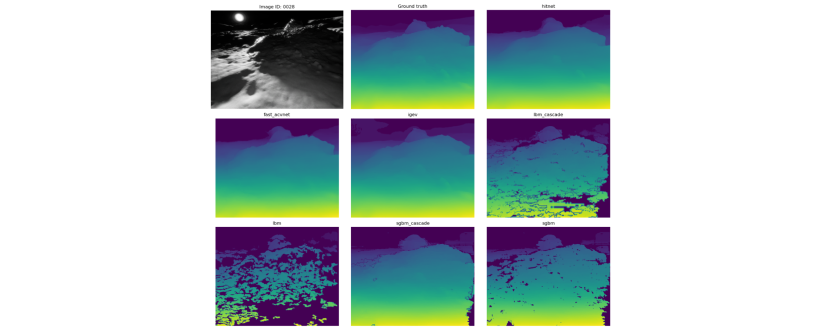

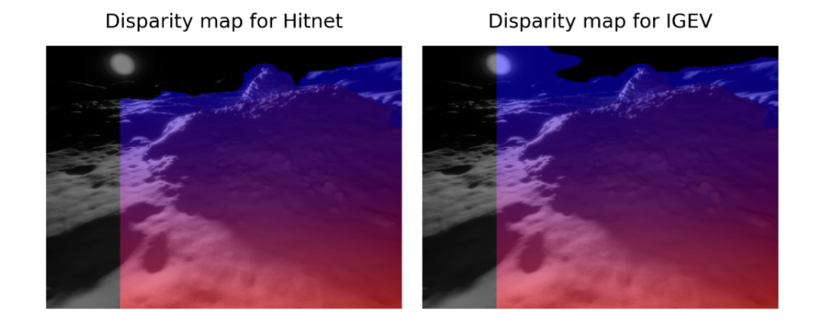

While the IGEV algorithm performed the best in detecting pixels on the synthetic dataset generated in NVIDIA Isaac Sim, it often misclassified lunar sky patches as scene objects, particularly when the sun was visible in the image, leading to false positives. Other algorithms, such as Hitnet or Fast-ACVNet+, produced fewer false positives on average on the synthetic dataset. A basic background thresholding method was employed to counteract these matches, but more advanced techniques or fine-tuning would be necessary for robust filtering of such regions. We also observed that while IGEV performs the best on average, other algorithms outperform it for certain images. The figure below illustrates sample results for the synthetic dataset, including false positives occurring around the sun.

Comparison of algorithm outputs for a single image from a synthetic lunar dataset

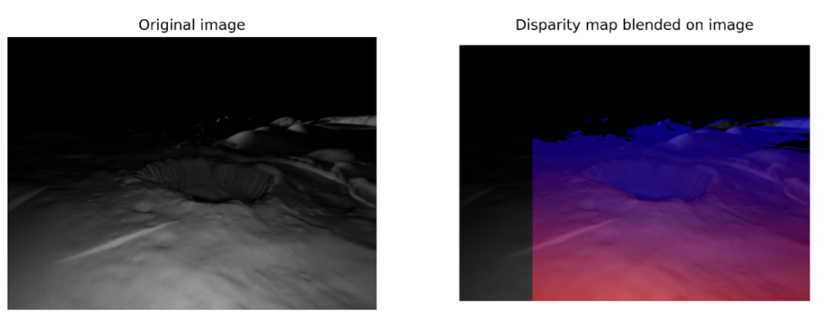

Sample disparity map obtained with IGEV algorithm for a synthetic image. The red spots are closer to the camera than the blue pixels.

The IGEV algorithm produces false positives around the sun. Pixels in the sky are wrongly classified as objects in the scene.

Conclusion

In this work, we presented our approach to benchmarking the inference time and accuracy of computer vision algorithms for space robotics use cases, specifically on the computer architecture found in the new radiation-hardened Blue Marble Communications Space Edge Processor (SEP) compute unit equipped with BruhnBruhn’s dacreo software stack. We evaluated various stereo-matching algorithms in terms of speed by running them on a test unit representative of the SEP. We also benchmarked the same algorithms in terms of quality using data from the POLAR Stereo Dataset and a photorealistic simulation.

We conclude that classical stereo-matching algorithms, such as Local Block Matching and Semi-Global Block Matching, can run on the Blue Marble hardware with real-time performance, with only minor tweaks to publicly available code. This may remove the need for FPGA ports, shortening development time and reducing risks. Moreover, the available computing capacity enables the use of multiple stereo pairs in real time, for increased robustness and scene coverage.

The processing power of the Blue Marble Space Edge Processor allows for running more advanced, AI-based algorithms for stereo matching directly on the edge. Although the inference time for AI-powered methods is higher than for classical algorithms, the coverage and quality of the created disparity maps surpass classical cascade approaches, making them a promising alternative for certain scenarios. AI-powered algorithms may be suitable for use cases where the quality and coverage of the created map are significantly more important than latency. Such use cases may include near real-time creation of maps of environments for science or mid-range path planning. It is important to mention that even the best-performing algorithms can produce false positives in some cases (e.g., around the sun). In addition, we noticed variance in deep learning algorithm performance depending on the conditions of the image. This could be because these algorithms were trained using Earth data, which is vastly different from the stereo pairs present in the test datasets. For those reasons, further transformations and fine-tuning on data simulating the lunar south pole would be required to increase the robustness of the algorithms.

The simulation environment based on NVIDIA Isaac Sim used in the study can be tweaked to accommodate multiple parameters, such as lighting intensity, material properties, or camera properties. This enables testing the robustness of a variety of computer vision algorithms in lunar conditions. The synthetic data generator pipeline allows for capturing scene properties to evaluate algorithms and potentially fine-tune them using lunar-like data.

Future work may include additional optimizations and upgrades to further reduce the latency of deep stereo algorithms on Blue Marble’s Space Edge Processor. It would also be interesting to examine how the quality of deep stereo methods changes after fine-tuning on a large-scale lunar dataset, which could be generated using NVIDIA Isaac Sim. Also, a more comprehensive analysis of the results for the POLAR dataset should be conducted. Instead of just discussing average outcomes, the results should be grouped and analyzed quantitatively by multiple categories (e.g., terrain, lighting, exposure time) to gain deeper insights into each algorithm’s performance and limitations.

If you want to talk in detail about algorithm configuration, datasets, and results, or you would like to learn more about potential use cases and applications, feel free to get in touch with us.