
The gradual shift from 2D to 3D imaging in various fields indicates a growing demand for 3D object creation. 3D imaging techniques fall into two main groups: active and passive. Active techniques project their own signal, such as light, onto the objects being measured. They are typically more precise and robust than passive ones.

Active techniques include time-of-flight (ToF) cameras, active stereoscopy, and structured light.

STRUCTURED LIGHT

Structured light is well suited to short and very short active scanning ranges. It is highly accurate and can reach 100 μm accuracy indoors. However, it cannot be used on dark, transparent, or highly reflective objects, or under variable lighting conditions.

A structured-light pattern is a specially designed 2D pattern, often represented as an image. Many pattern types exist, and the choice depends on the application. The most basic approach uses Moiré fringe patterns: a set of sinusoidal patterns, each shifted by a different phase.
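The following is a minimal sketch of such a pattern set. It assumes N equally spaced phase shifts of 2πk/N and fringes that vary only along x. The function name and its parameters (`fringe_patterns`, `periods`, `steps`) are hypothetical, and the article does not specify the exact patterns it uses.

```python
import math


def fringe_patterns(width, height, periods, steps, amplitude=0.5, offset=0.5):
    """Return `steps` phase-shifted sinusoidal fringe images (hypothetical helper).

    Each image is a list of rows of floats in [0, 1]. Intensity varies along x,
    with `periods` full fringes across the width; pattern k is shifted by
    2*pi*k/steps.
    """
    patterns = []
    for k in range(steps):
        shift = 2 * math.pi * k / steps
        # Vertical fringes: one row fully defines the pattern, so repeat it.
        row = [
            offset + amplitude * math.cos(2 * math.pi * periods * x / width + shift)
            for x in range(width)
        ]
        patterns.append([list(row) for _ in range(height)])
    return patterns


if __name__ == "__main__":
    # Four patterns, each shifted by a quarter period relative to the previous one.
    for k, img in enumerate(fringe_patterns(width=8, height=2, periods=1, steps=4)):
        print(k, [round(v, 2) for v in img[0]])
```

With `steps=4`, consecutive patterns are shifted by 90°. This is the classic four-step configuration. At least three shifts are needed to recover the phase at each pixel.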

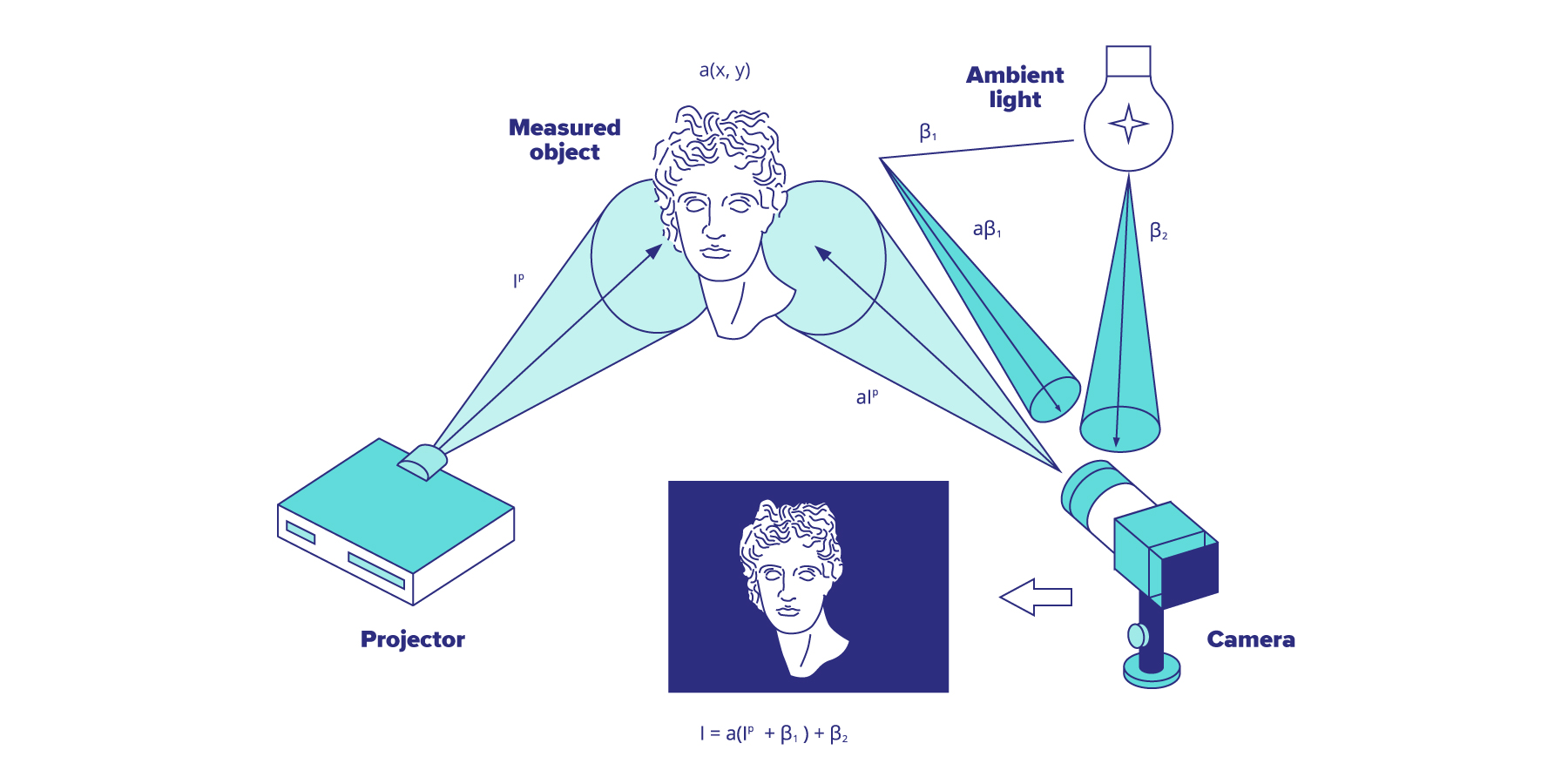

The simplest setup for a structured-light system, illustrated in the accompanying figure, projects a pattern onto an object while a camera captures the resulting superposition. The key step of the algorithm is to recover the object's 3D shape from these camera images. Infrared or visible light is typically preferred, but the technique is not limited to a specific part of the spectrum.
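For phase-shifted sinusoidal patterns, the first part of this recovery is usually to estimate the fringe phase at each pixel. The following is a minimal sketch of the standard N-step phase-shifting formula. It assumes capture k was taken under a pattern shifted by δ_k = 2πk/N, so that I_k = A + B·cos(φ + δ_k). The function name `wrapped_phase` is hypothetical, and the article does not state which decoding method it uses.

```python
import math


def wrapped_phase(intensities):
    """Estimate the wrapped phase at one pixel from N >= 3 phase-shifted captures.

    Assumes capture k was taken under a pattern shifted by delta_k = 2*pi*k/N,
    i.e. I_k = A + B*cos(phi + delta_k). Returns phi wrapped to (-pi, pi].
    """
    n = len(intensities)
    if n < 3:
        raise ValueError("need at least 3 phase-shifted images")
    s = sum(i * math.sin(2 * math.pi * k / n) for k, i in enumerate(intensities))
    c = sum(i * math.cos(2 * math.pi * k / n) for k, i in enumerate(intensities))
    # For equally spaced shifts, the A terms cancel and
    # s = -(N*B/2)*sin(phi), c = (N*B/2)*cos(phi).
    return math.atan2(-s, c)


if __name__ == "__main__":
    # Simulate one pixel with a known phase of 1.0 rad and four captures.
    captures = [0.4 + 0.3 * math.cos(1.0 + 2 * math.pi * k / 4) for k in range(4)]
    print(round(wrapped_phase(captures), 6))  # 1.0
```

The result is wrapped into (-π, π]. Turning it into depth still requires phase unwrapping and triangulation with the calibrated camera-projector geometry. Both steps are outside this sketch.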

USE CASES

High-quality, real-time 3D scanning captures real-world objects quickly and reconstructs them accurately. Adding a third dimension further widens the already broad range of applications for imaging technologies. Imaging is ubiquitous, from the cameras built into everyday devices for video chats to the endoscopes doctors use to observe and collect human cells and tissue.

Structured-light 3D technology opens a world of opportunities:

- Medicine: Facial anthropometry, cardiac mechanics analysis, and 3D endoscopy imaging.

- Security: Biometric identification, 3D manufacturing defect detection, and motion-activated security cameras.

- Entertainment and HCI: Hand gesture detection, expression detection, gaming, and 3D avatars.

- Communication and collaboration: Remote object transmission and 3D video conferencing.

- Preservation of historical artifacts.

- Manufacturing: 3D optical scanning, reverse engineering of objects to produce CAD data, volume measurement of intricate engineering parts, and more.

- Retail: Skin surface measurement for cosmetics research and wrinkle measurement on various fabrics.

- Automotive: Obstacle detection systems.

- Guidance for industrial robots.

OUR APPROACH

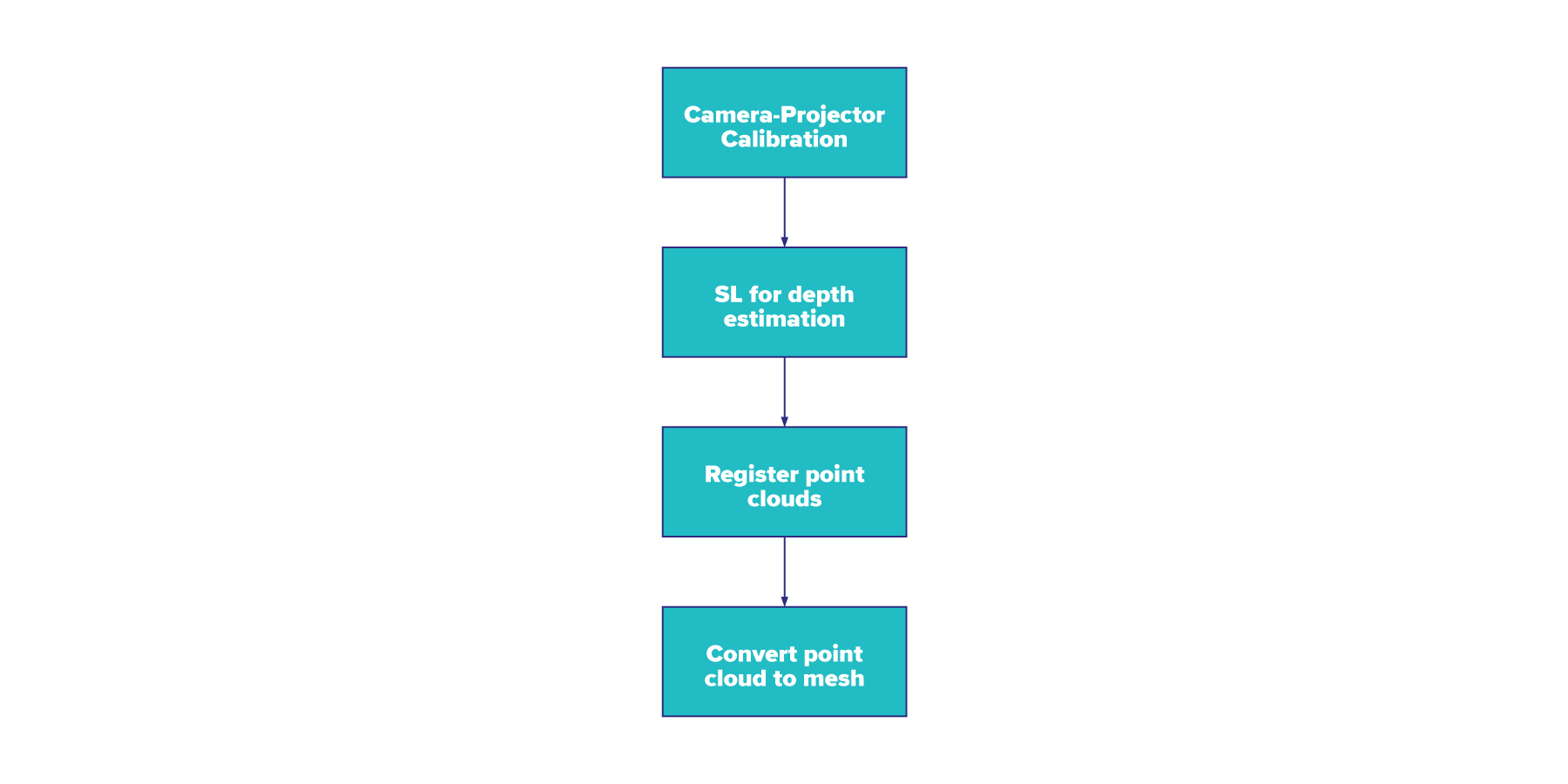

A single structured-light scan captures only the surfaces of an object that are visible from one viewpoint. Our goal is to acquire an object's full shape by capturing it from different perspectives and then assembling the complete picture.
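Assembling the views requires expressing every view's point cloud in one common coordinate frame. The following is a minimal sketch that assumes each view's pose is already known as a single rotation about the vertical (y) axis. This would be the case, for example, with a hypothetical turntable whose axis passes through the origin. In practice, poses are usually estimated or refined with a registration algorithm such as Iterative Closest Point (ICP). The helper names `rotate_about_y` and `merge_views` are illustrative, and the sketch covers only the final transform-and-concatenate step.

```python
import math


def rotate_about_y(points, angle_rad):
    """Rotate (x, y, z) points about the y axis by angle_rad (right-handed)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    # Rotation matrix R_y = [[c, 0, s], [0, 1, 0], [-s, 0, c]] applied to each point.
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]


def merge_views(views):
    """Merge per-view point clouds into one frame (assumes known poses).

    `views` is a list of (angle_rad, points) pairs, where angle_rad is the
    rotation about the y axis that maps that view's points into the common frame.
    """
    merged = []
    for angle, points in views:
        merged.extend(rotate_about_y(points, angle))
    return merged


if __name__ == "__main__":
    front = (0.0, [(0.0, 0.0, 1.0)])
    side = (math.pi / 2, [(0.0, 0.0, 1.0)])
    print(merge_views([front, side]))
```

Keeping the pose as data next to each cloud makes it easy to substitute poses estimated by ICP or another registration method later.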

We started by generating synthetic images with the Unity engine and building a full 3D reconstruction pipeline on them. The process can be mapped to real-world applications quickly and easily. We are currently working on the hardware needed to scan real objects. Our method consists of six main steps:

- Calibrate the camera-projector pair

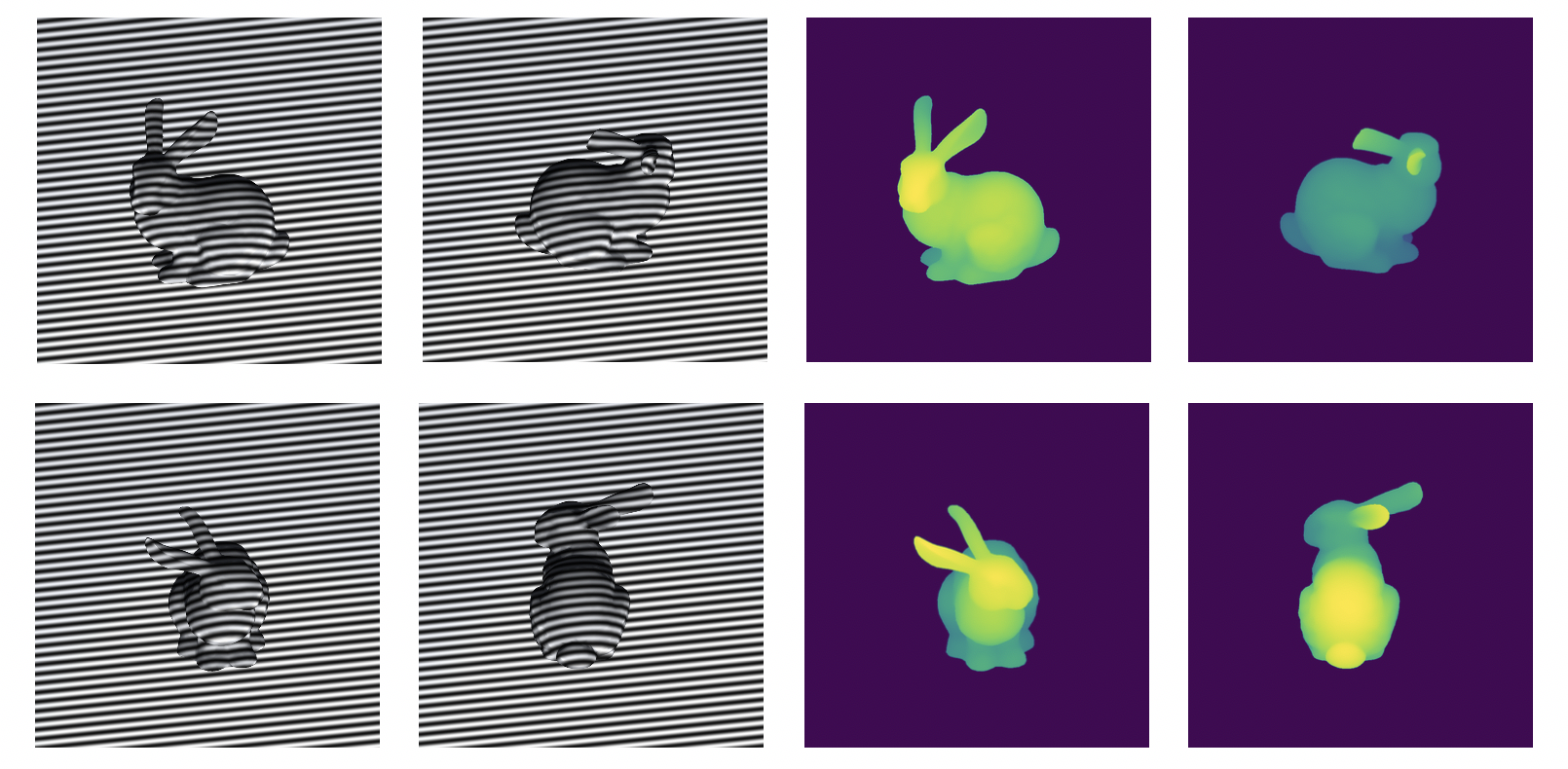

- Project multiple Moiré patterns onto the object from different views (Fig. 2a)

- Compute depth information of the object for each view (Fig. 2b)

- Remove the background and extract the object's point cloud

- Register point clouds from multiple views into a single one (Fig. 2c)

- Convert the point cloud into a mesh (Fig. 2d)
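The transition from depth computation to background removal can be sketched once a per-pixel depth map is available, for example after phase unwrapping and triangulation with the calibrated camera-projector pair. The following is a minimal sketch that assumes a pinhole camera model with intrinsics `fx`, `fy`, `cx`, and `cy` obtained from the calibration step. It stands in for background removal with a simple depth window (`z_min`, `z_max`). These names and the windowing approach are illustrative assumptions, not necessarily what the described pipeline does.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy, z_min, z_max):
    """Back-project a depth map into 3D points, keeping z_min <= Z <= z_max.

    depth[v][u] is the depth Z along the optical axis at pixel (u, v); 0 or None
    marks pixels without a measurement. fx, fy, cx, cy are pinhole intrinsics
    (assumed to come from calibration). The depth window is a simple stand-in
    for background removal.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if not z or not (z_min <= z <= z_max):
                continue  # no measurement, or background outside the window
            # Pinhole back-projection: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


if __name__ == "__main__":
    depth = [[0.5, 0.5, 2.0], [0.5, 0, 0.5]]  # 2.0 acts as a far background pixel
    cloud = depth_to_point_cloud(depth, fx=100.0, fy=100.0, cx=1.0, cy=0.5,
                                 z_min=0.1, z_max=1.0)
    print(len(cloud), cloud[0])
```

A fixed depth window is the crudest form of segmentation. It works only when the object sits at a known distance in front of a clearly separated background.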

Our goal is to add Compute Unified Device Architecture (CUDA) support to the algorithm and port it to the NVIDIA® Jetson Nano™ Developer Kit. Neural networks can also be applied at each step to improve the algorithm's performance and accuracy or to remove its limitations.

Contact SoftServe today to learn more